- News: SpAtten is spotlighted on MIT Homepage.

- News: SpAtten is covered by MIT News.

- News: SpAtten is accepted by HPCA 2021.

- News: HAT is covered by MIT News and VentureBeat.

- News: HAT is accepted by ACL 2021.

- News: Lite Transformer is accepted by ICLR 2020.

- News: SpArch is accepted by HPCA 2020.

- News: MicroNet Compression is accepted by JMLR.

- News: Our team wins champion of Neurips 2019 MicroNet Efficient NLP challenge.

Related projects:

Efficient NLP Full-Stack Innovations

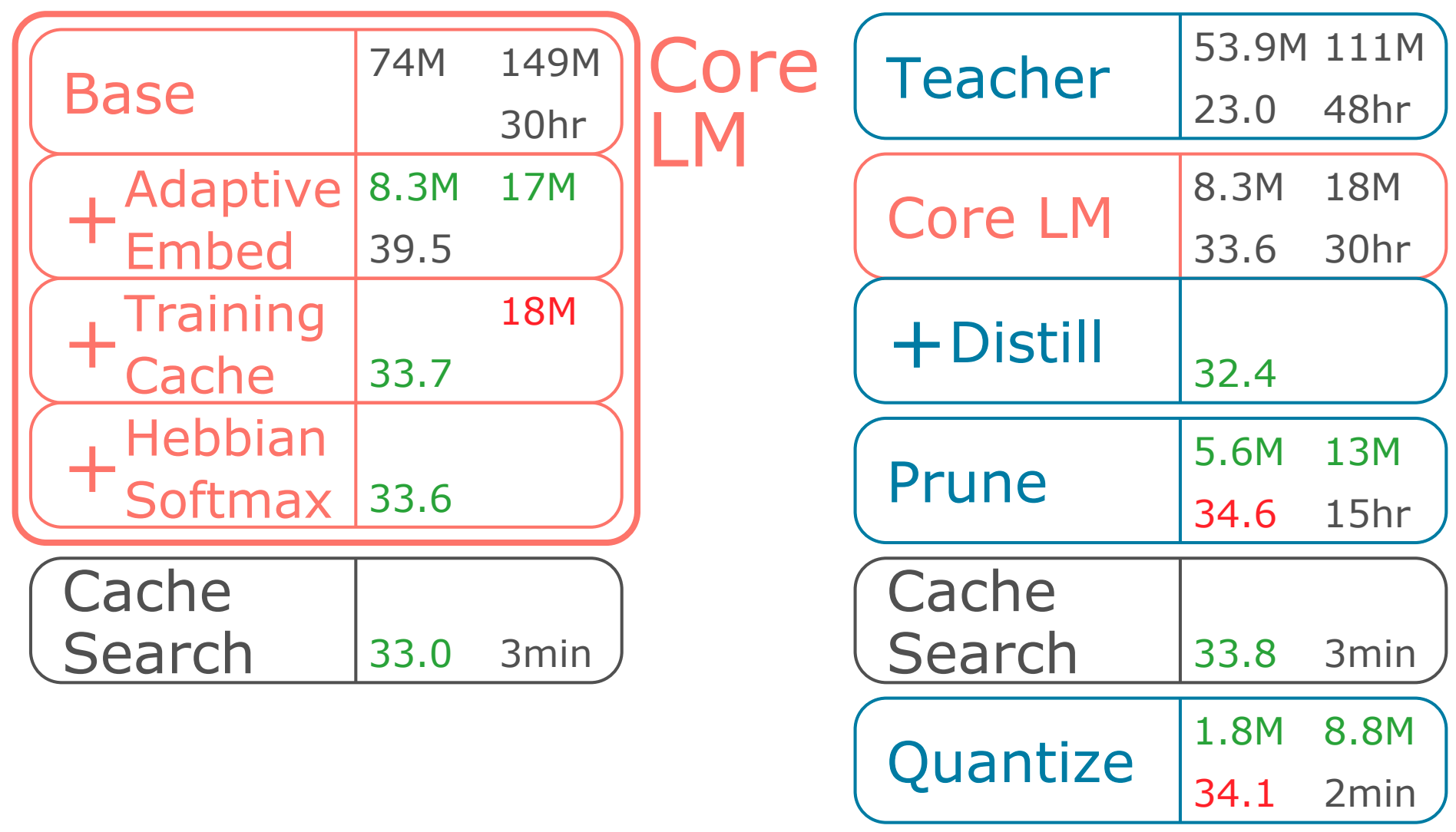

The model size and computation of NLP models are increasing exponentially. That requires innovations across the full stack, from algorithm to hardware. Our goal is to push the frontier of green, environmental friendly and sustainable NLP by reducing the model inference and training cost, and democratize NLP by enabling them on affordable low-end hardware devices which are accessible to all people. Specifically, the HAT Hardware-Aware Transformer NAS proposes an efficient NAS framework to search for specialized models for target hardware platforms with hardware feedback (e.g., energy, latency) in the loop. Lite Transformer proposes a new hybrid convolution-attention operation. SpAtten is an algorithm-hardware co-design accelerator with support of token and head pruning and progressive quantization. It accelerates NLP models by removing sentence redundancy. MicroNet compression experiments with weight pruning and quantization of language models. SpArch accelerator accelerates sparse matrix multiplications for sparse FC in NLP layers by jointly optimizing input and output data reuse. Click blocks below to jump to corresponding section.

SpAtten Accelerator

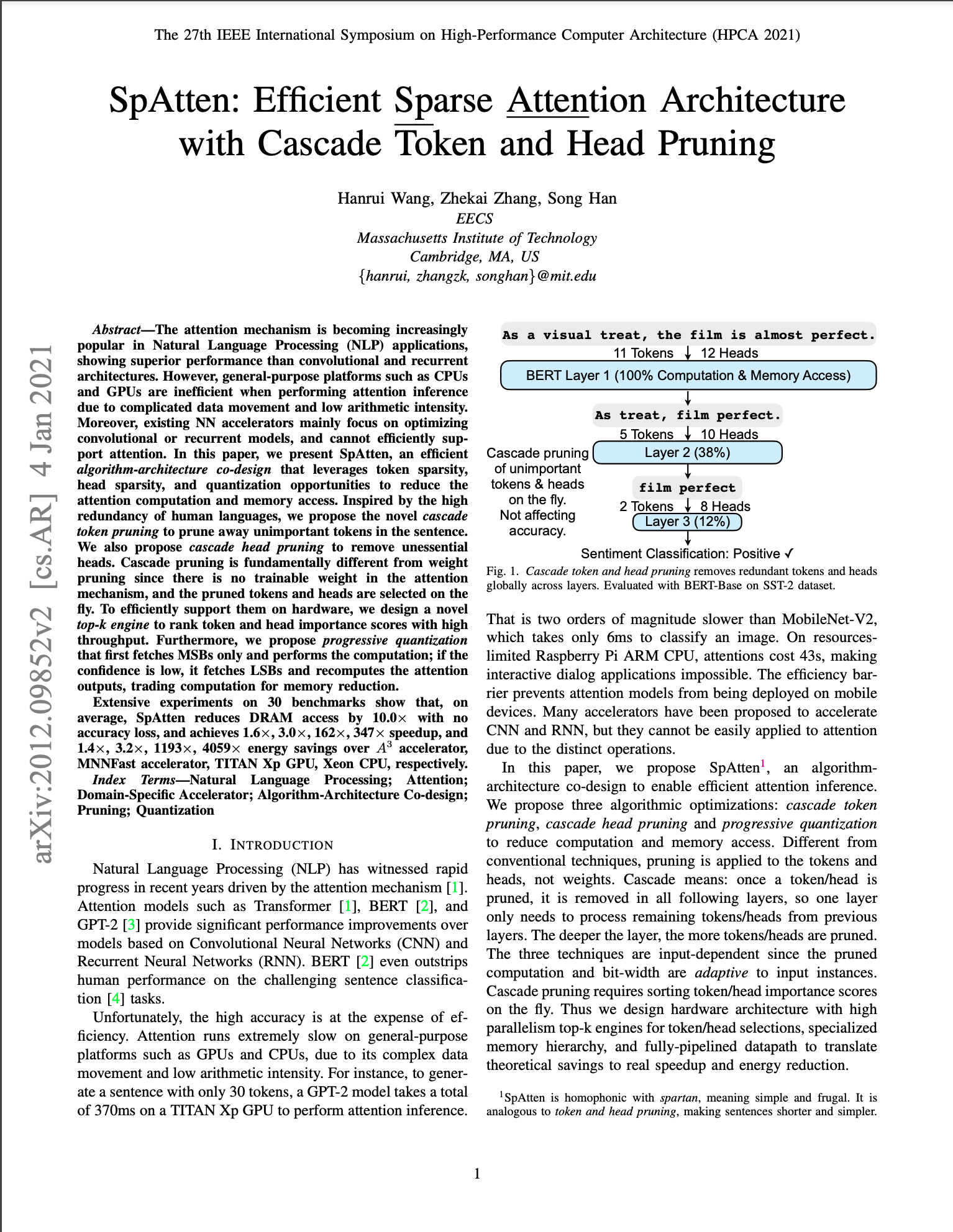

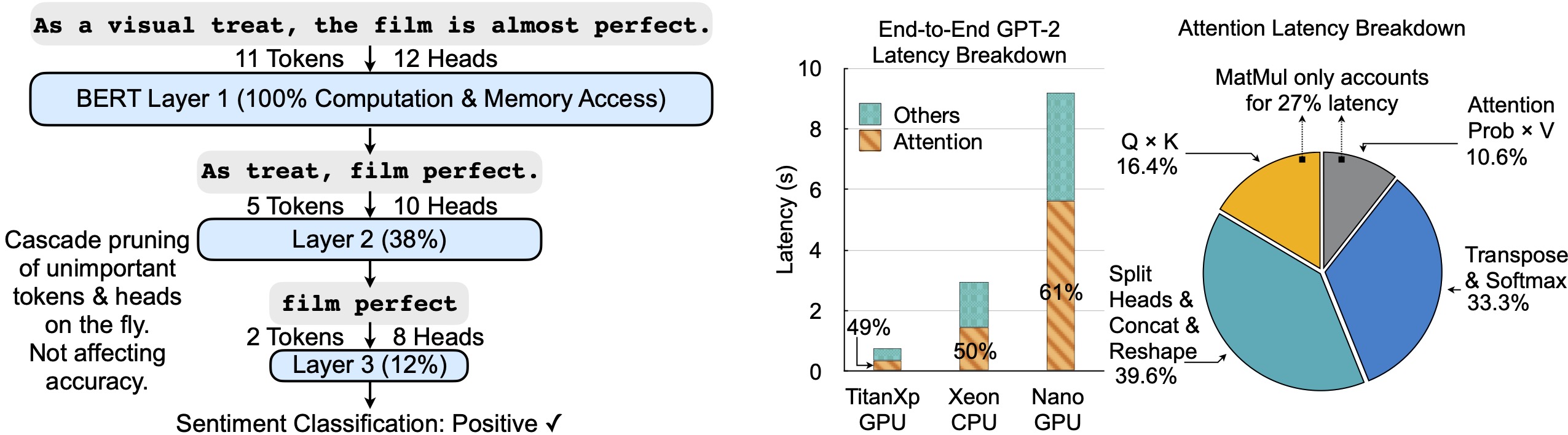

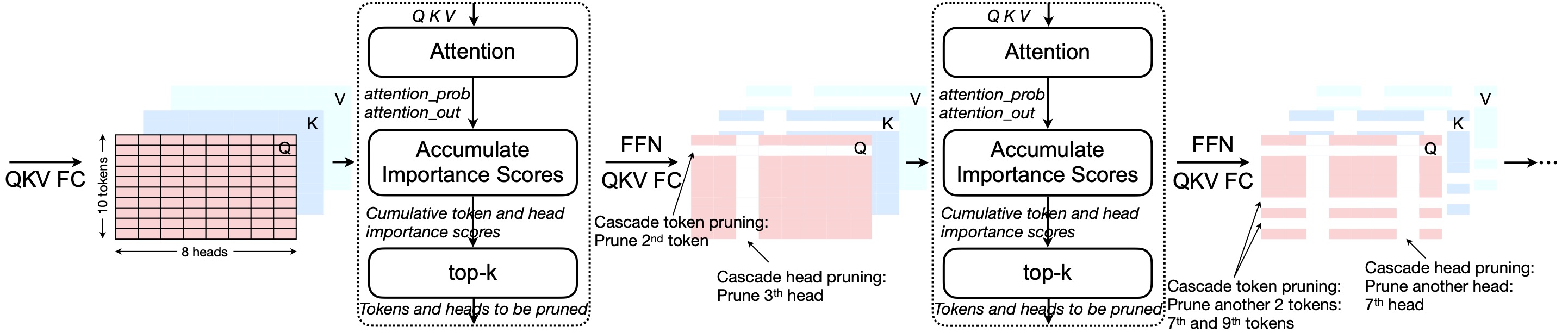

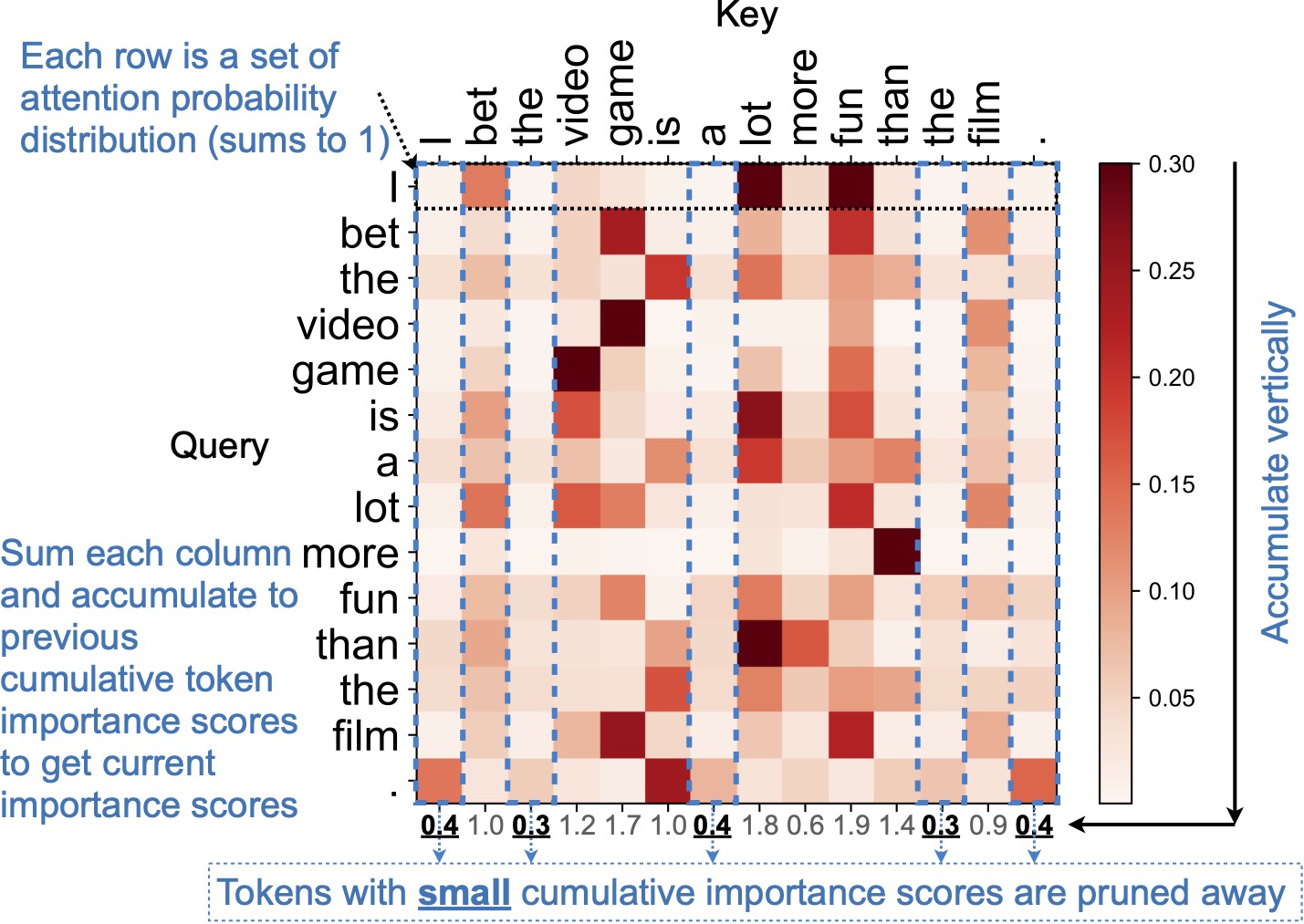

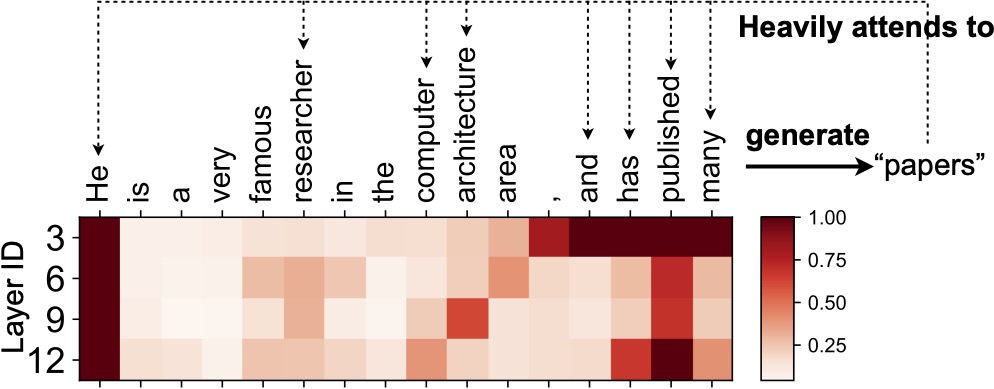

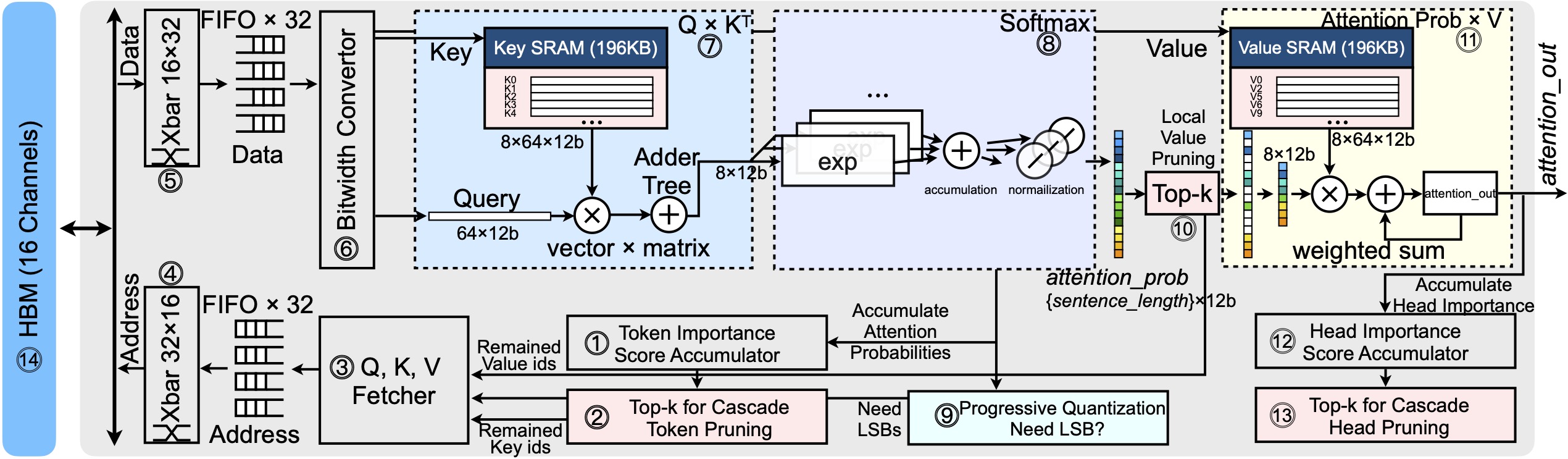

The attention mechanism is becoming increasingly popular in Natural Language Processing (NLP) applications, showing superior performance than convolutional and recurrent architectures. However, general-purpose platforms such as CPUs and GPUs are inefficient when performing attention inference due to complicated data movement and low arithmetic intensity. Moreover, existing NN accelerators mainly focus on optimizing convolutional or recurrent models, and cannot efficiently support attention. SpAtten is an efficient algorithm-architecture co-design that leverages token sparsity, head sparsity, and quantization opportunities to reduce the attention computation and memory access. Inspired by the high redundancy of human languages, we propose the novel cascade token pruning to prune away unimportant tokens in the sentence. We also propose cascade head pruning to remove unessential heads. Cascade pruning is fundamentally different from weight pruning since there is no trainable weight in the attention mechanism, and the pruned tokens and heads are selected on the fly. To efficiently support them on hardware, we design a novel top-k engine to rank token and head importance scores with high throughput. Furthermore, we propose progressive quantization that first fetches MSBs only and performs the computation; if the confidence is low, it fetches LSBs and recomputes the attention outputs, trading computation for memory reduction. Extensive experiments on 30 benchmarks show that, on average, SpAtten reduces DRAM access by 10.0x with no accuracy loss, and achieves 1.6x, 3.0x, 162x, 347x speedup, and 1,4x, 3.2x, 1193x, 4059x energy savings over A3 accelerator, MNNFast accelerator, TITAN Xp GPU, Xeon CPU, respectively.

SpAtten token/head pruning overview

Remove redundant tokens and heads with cumulative importance scores, thus reducing computation and DRAM access.

Visualizations

More visualizations and examples on how tokens are pruned based on attention probabilities.

On discriminative BERT Model:

- Bert for Sentence Classification. (Film sentiment classification, result: positive)

[Original] A wonderful movie, I am sure that you will remember it, you admire its conception and are able to resolve some of the confusions you had while watching it.

[Pruning Round 1] A wonderful movie, I am sure that you will remember it, you admire its conception and are able to resolve some of the confusions you had while watching it.

[Pruning Round 2] A wonderful movie, I am sure that you will remember it, you admire its conception and are able to resolve some of the confusions you had while watching it.

[Remained] sure remember admire resolve confusions

[Original] It does sound like your cat is upset about something, and trying to communicate it to you. [separate] Something is bothering your cat and he wants to tell you.

[Pruning Round 1] It does sound like your cat is upset about something, and trying to communicate it to you. [separate] Something is bothering your cat and he wants to tell you.

[Pruning Round 2] It does sound like your cat is upset about something, and trying to communicate it to you. [separate] Something is bothering your cat and he wants to tell you.

[Remained] sound cat upset and trying communicate. [separate] bothering

On generative GPT-2 Model:

- GPT-2 for Language Modeling. (English is the generated token.)

[Original] Du Fu was a great poet of the Tang dynasty. Recently a variety of styles have been used in efforts to translate the work of Du Fu into English

[Pruning Round 1] Du Fu was a great poet of the Tang dynasty. Recently a variety of styles have been used in efforts to translate the work of Du Fu into English

[Pruning Round 2] Du Fu was a great poet of the Tang dynasty. Recently a variety of styles have been used in efforts to translate the work of Du Fu into English

[Remained] Du translate into English

Hardware Architecture

The SpAtten architecture is equipped with specialized piplined datapath and operators for high throughput and energy efficiency.

Speedup and Energy Efficiency

SpAtten has more than 100x speedup over server-level GPU and CPU, more than 1000x speedup over edge devices, and 1.6x to 3x speedup over previous state-of-the-art accelerators.

Breakdown of Speedup over GPU

22x speedup is from specialized datepath, 3.4x from pruning techniques, and 2.8x from progressive quantization.

Citation

@inproceedings{hanruiwang2021spatten,

title = {SpAtten: Efficient Sparse Attention Architecture with Cascade Token and Head Pruning},

author = {Wang, Hanrui and Zhang, Zhekai and Han, Song},

booktitle = {27th IEEE International Symposium on High Performance Computer Architecture (HPCA)},

year = {2021}

}

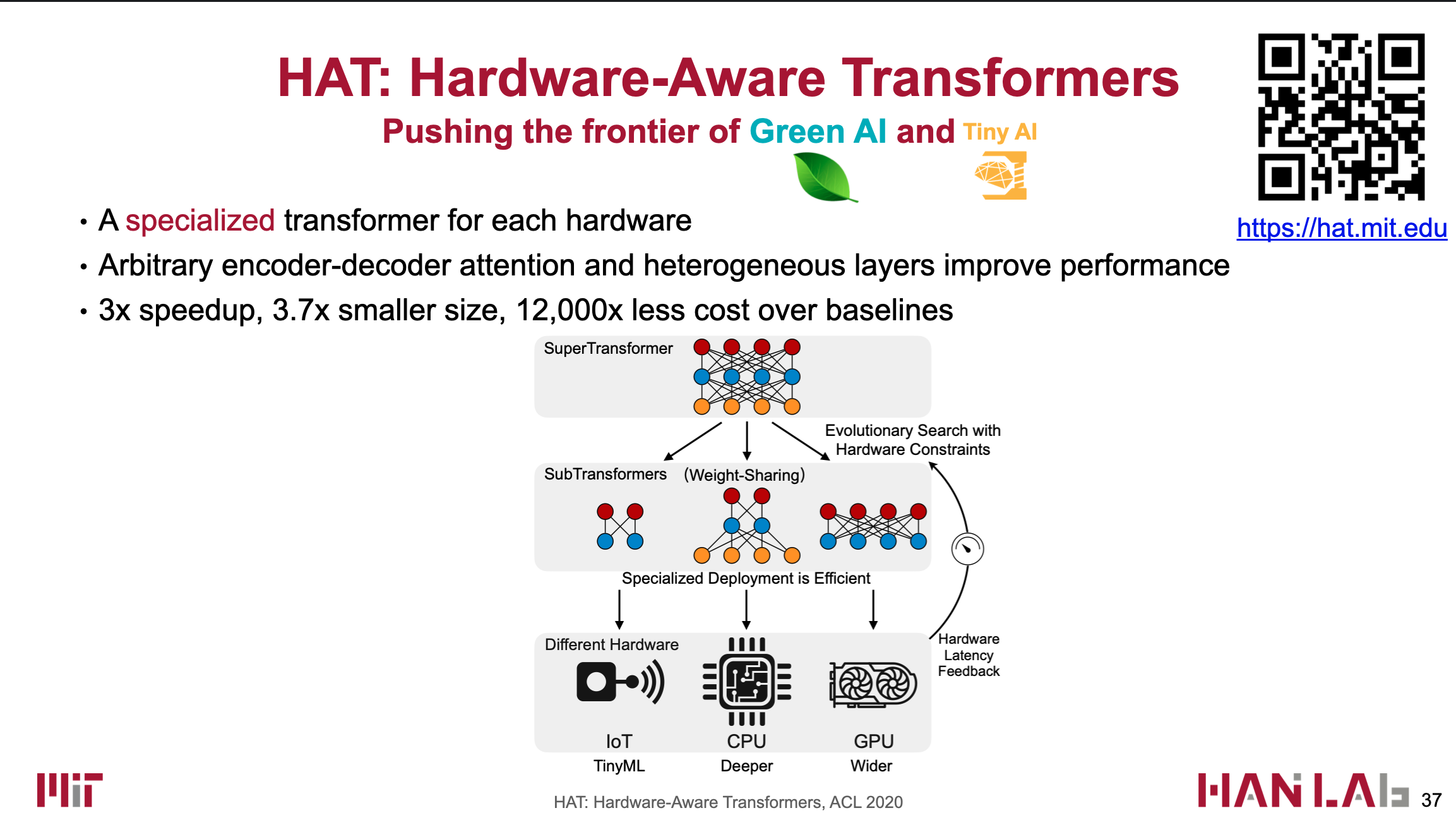

HAT Hardware-Aware Transformer NAS

Hanrui Wang, Zhanghao Wu, Zhijian Liu, Han Cai, Ligeng Zhu, Chuang Gan, Song Han

ACL 2020 Paper / Slides / Video / Code / Project Page

HAT NAS framework leverages the hardware feedback in the neural architecture search loop, providing a most suitable model for the target hardware platform.

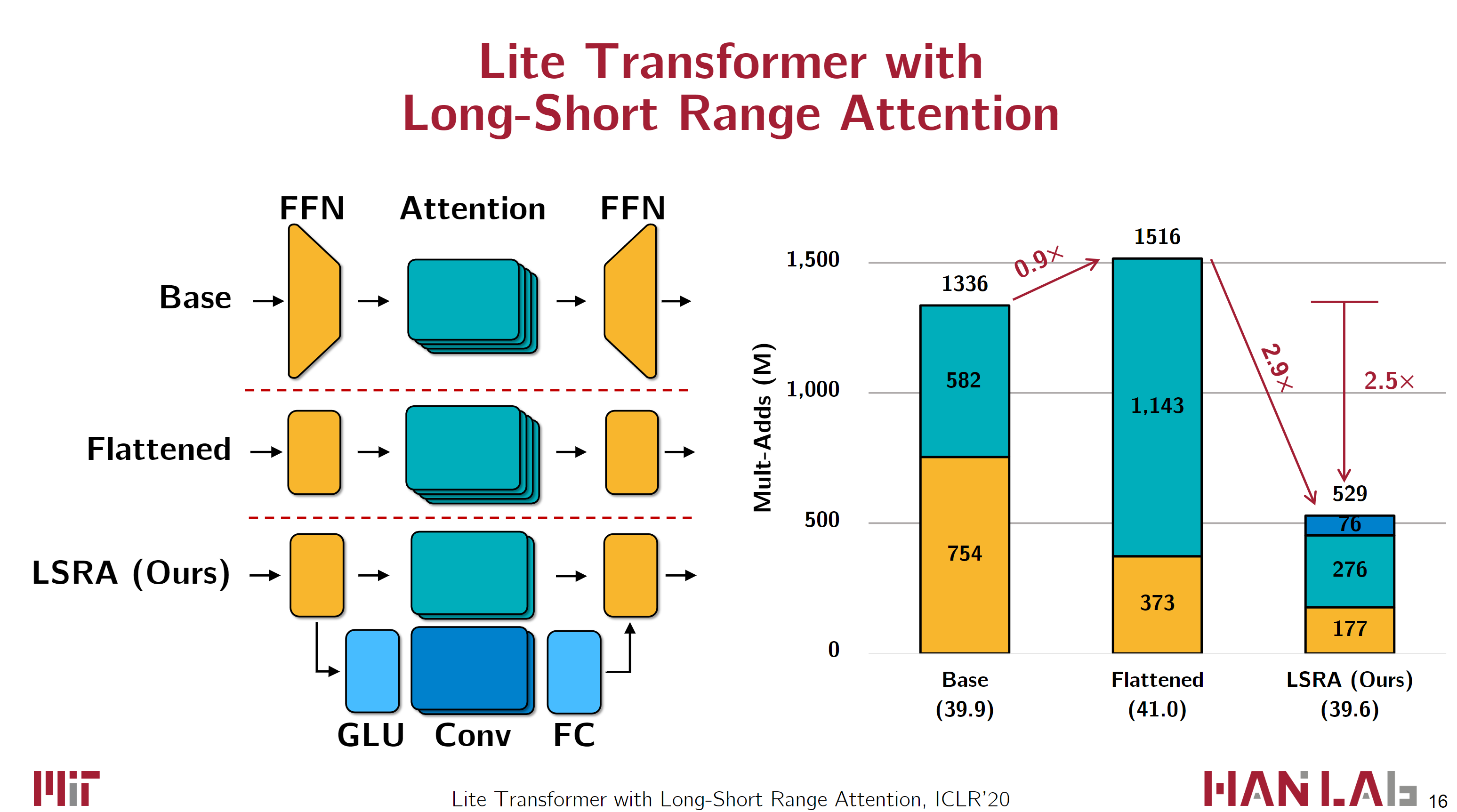

Lite Transformer

Zhanghao Wu, Zhijian Liu, Ji Lin, Yujun Lin, Song Han

Paper / Slides / Code / Project Page

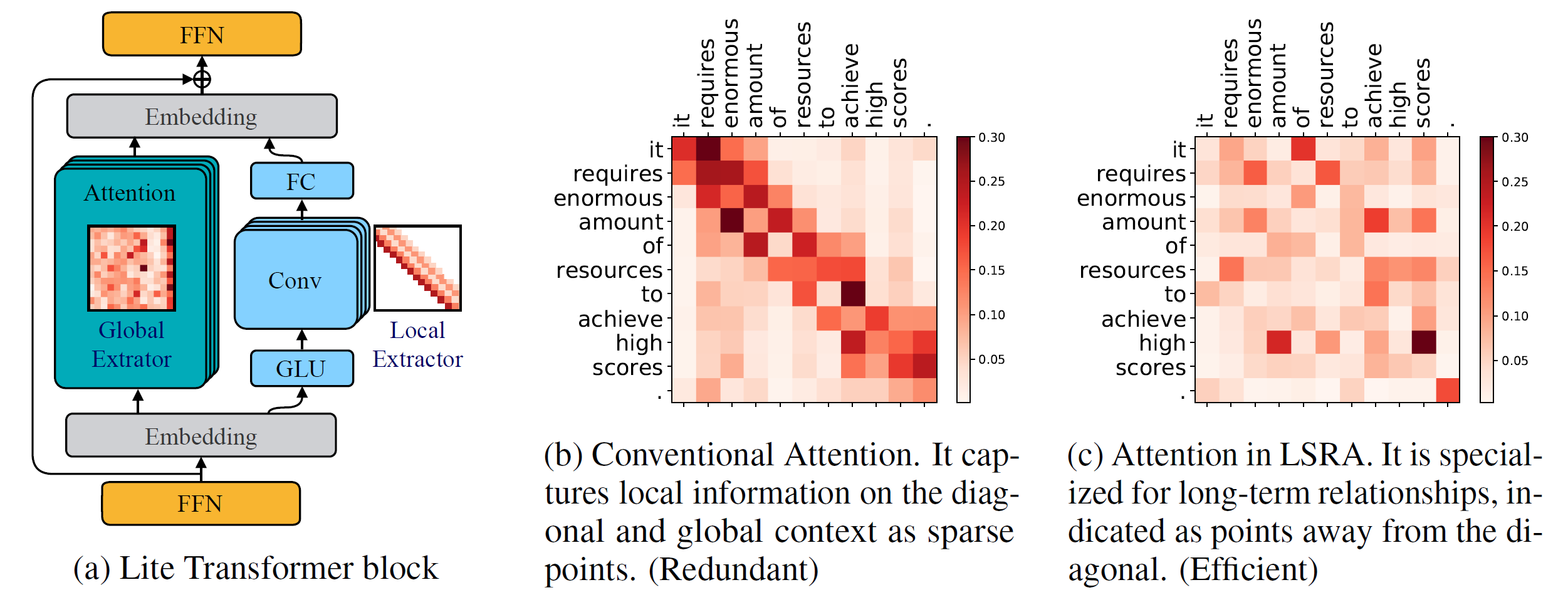

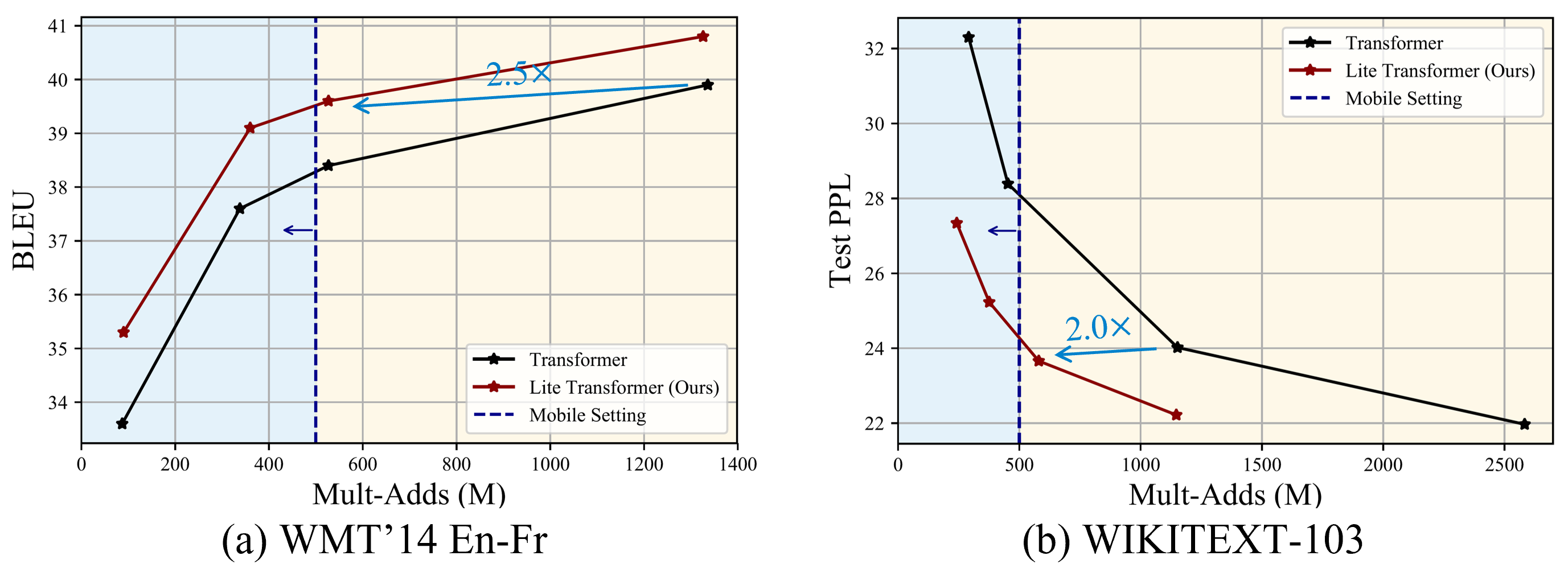

Lite transformer designs Long-Short Range Attention (LSRA) with attention and convolution as two branches

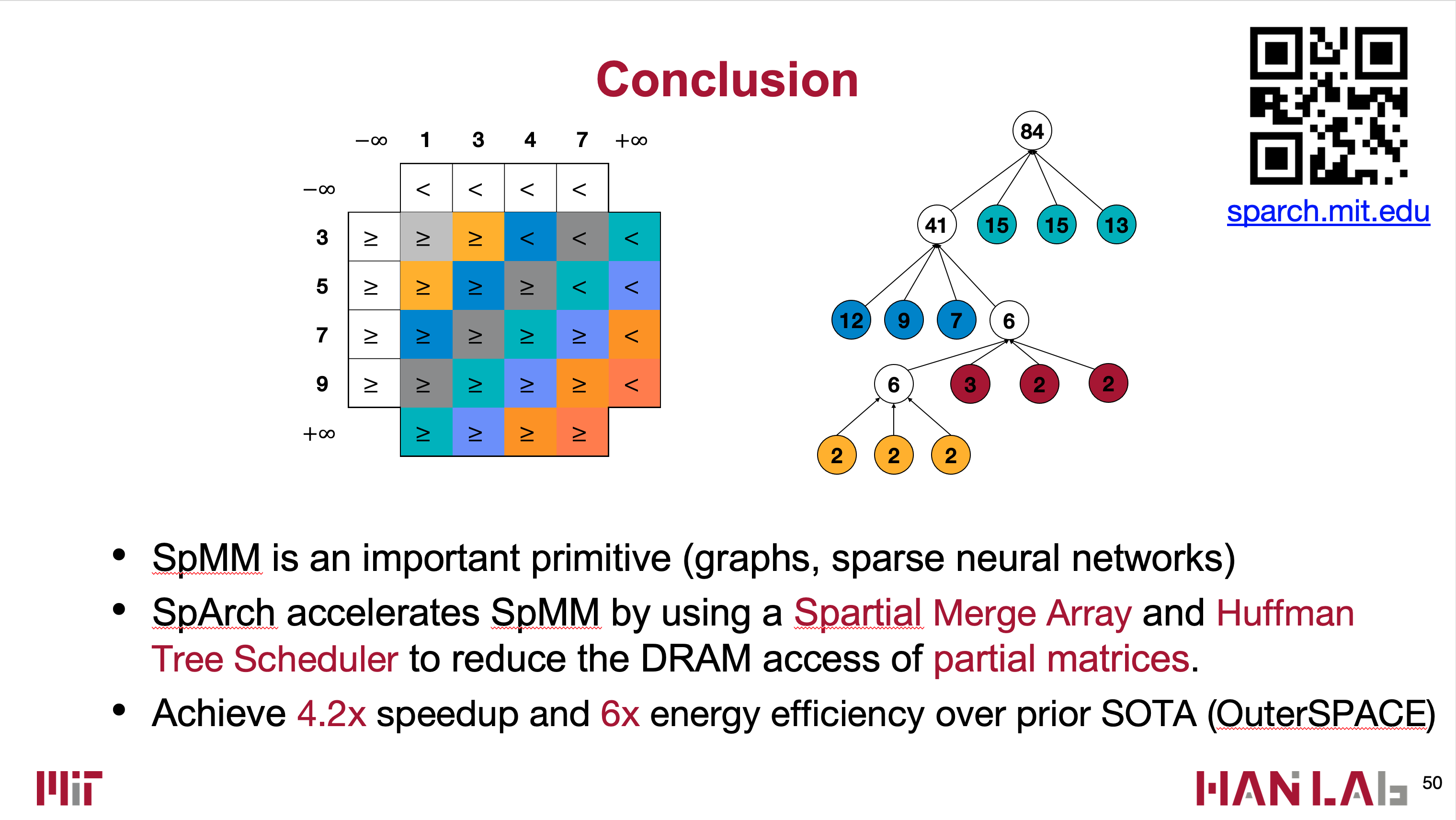

SpArch Accelerator

Zhekai Zhang*, Hanrui Wang* , Song Han, William J. Dally (* Equal Contributions)

HPCA 2020 Paper /

2-min Intro /

Intro /

Talk /

Slides /

Project Page

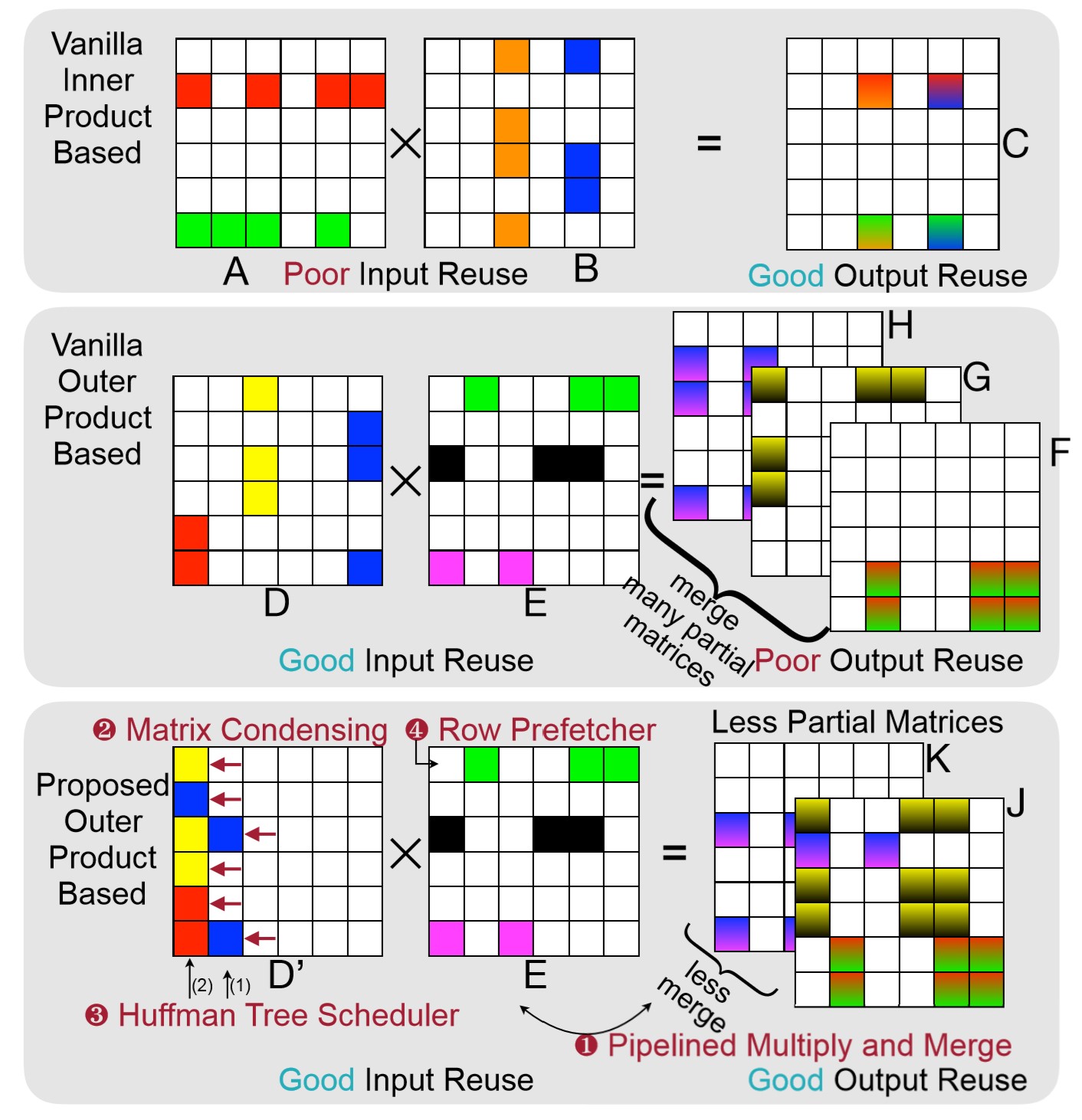

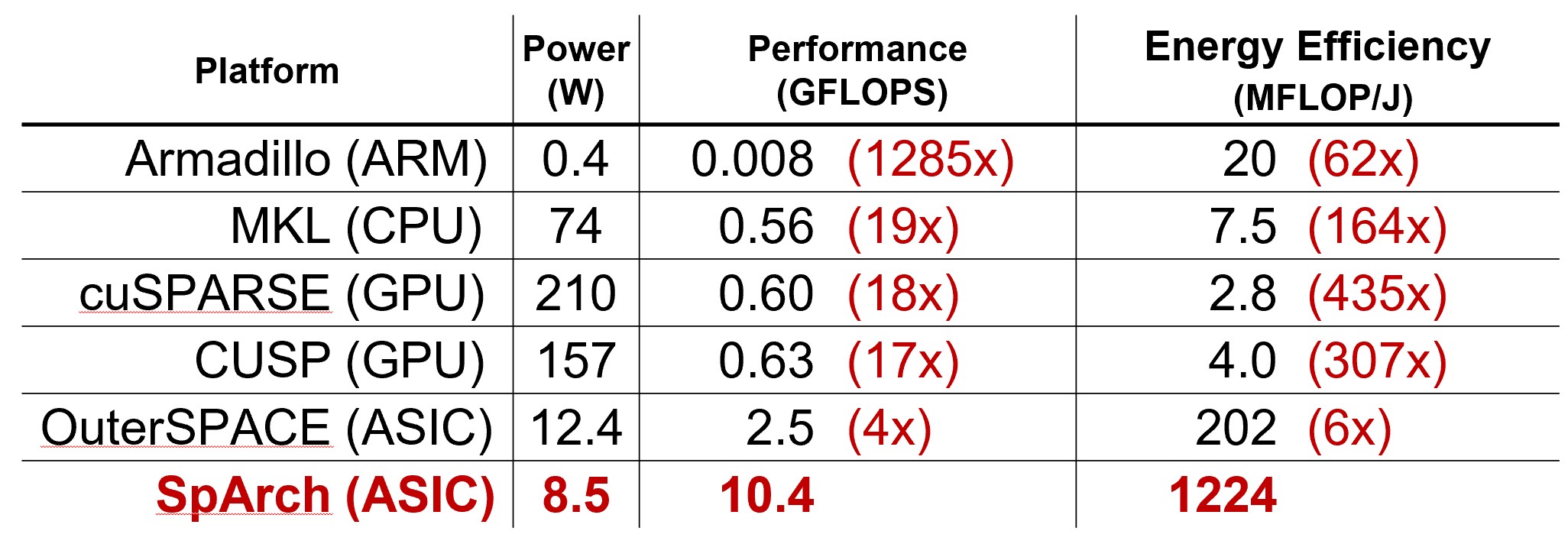

SpArch accelerator accelerates sparse matrix multiplications by joinly optimizing input and output data reuse.

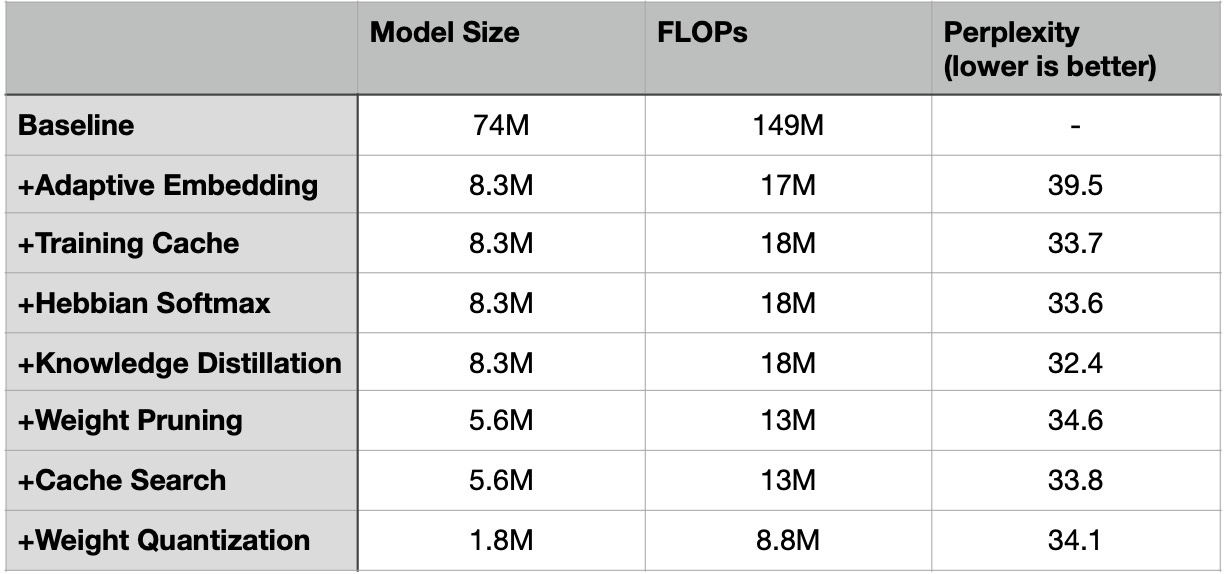

MicroNet Compression

Zhongxia Yan, Hanrui Wang, Demi Guo, Song Han

Paper /

Code /

Talk /

Project Page

NLP Micronet experiments on weight pruning and quantization of lanauge models. It reduces the model size by more than 41x and won the champion of NeurIPS 2019 MicroNet efficient NLP challenge.